YouTube Music Playlist Dumping

Via Google Play Music, I have some old YouTube Music playlists, but there does not seem to be a way to export them in a useful form. Takeout lets you export them, but you only get video IDs and a timestamp, which is not immediately helpful and, for uploaded music, prohibitively hard to make sense of.

Here’s a JavaScript snippet that can be pasted into the web inspector while viewing a playlist on music.youtube.com, to dump the track metadata. It exports tab-separated values.

// Scroll to bottom of playlist.
// To save results, right click and "Copy Object".
// Double-check the number of results; if entries are missing, you might need to scroll further.
// (Sometimes the number of entries simply doesn't match the playlist's count. May be limited to auto playlists.)
{
  const playlistName = document.getElementsByTagName('ytmusic-detail-header-renderer')[0].__data.data.title.runs[0].text;
  const playlist = document.getElementsByTagName('ytmusic-playlist-shelf-renderer')[0];
  const entries = ["Video Id\tTitle\tArtist\tAlbum"];

  // Some columns have no text runs (e.g. a missing album); return "" for those.
  function textFromColumnIfPresent(col) {
    return col.musicResponsiveListItemFlexColumnRenderer.text.runs ? col.musicResponsiveListItemFlexColumnRenderer.text.runs[0].text : "";
  }

  for (const item of playlist.__data.data.contents) {
    const renderer = item.musicResponsiveListItemRenderer;
    const id = renderer.playlistItemData ? renderer.playlistItemData.videoId : ""; // Track may be unavailable
    const title = textFromColumnIfPresent(renderer.flexColumns[0]);
    const artist = textFromColumnIfPresent(renderer.flexColumns[1]);
    const album = textFromColumnIfPresent(renderer.flexColumns[2]);
    entries.push(id + "\t" + title + "\t" + artist + "\t" + album);
  }

  console.log("Found " + (entries.length-1) + " tracks");
  console.log("Playlist: " + playlistName);
  console.log(entries.join('\n'));
}
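Once the dump is saved, it can be turned back into structured data. The following is a minimal sketch, assuming the text was copied unchanged and that no title contains a tab or newline; parseDump is a made-up helper name and the sample rows are invented.

```javascript
// Illustrative sketch: turn the tab-separated dump into an array of objects
// keyed by the header row ("Video Id", "Title", "Artist", "Album").
function parseDump(tsv) {
  const [header, ...rows] = tsv.split("\n");
  const keys = header.split("\t");
  return rows.map((row) => {
    const cells = row.split("\t");
    // Missing trailing cells become empty strings.
    return Object.fromEntries(keys.map((key, i) => [key, cells[i] ?? ""]));
  });
}

// Invented sample; the second row has no video ID, like an unavailable track.
const sample =
  "Video Id\tTitle\tArtist\tAlbum\nabc123\tSong\tBand\tRecord\n\tGone\tBand\t";
console.log(JSON.stringify(parseDump(sample), null, 2));
```

Running this under Node prints the tracks as JSON, which is easier to feed into other tools than raw TSV.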

Vector 4 Technical Information

I recently came into possession of the Vector 4 Technical Information (P/N 7200-0001) by Vector Graphic Inc, and it is a gold mine. The Vector 4 User’s Manual and Vector 4 Programmer’s Guide seem to have been standard issue, so my family had multiple copies (one per computer), and they are already digitized by bitsavers. The Technical Information seems to be much rarer and oriented toward maintenance shops. That means it has very detailed information, including jumpers, clock timing, and schematics, with lots of text explaining it all. I’ve found it to be extremely helpful for working on my Vector 4.

I’ve happily digitized my copy. The schematics at the end are ledger-size pages (17" x 11") which made them difficult to scan, and unfortunately I was only able to scan them at 400 dpi. They are usable, but the originals are easier to read.

Vector 4 Keyboard Adapter

I was working on an MFM decoder for PulseView to help find bugs I introduced in FlashFloppy. That was working pretty well, but I needed some help keeping count of the current track. Using the Counter decoder on the STP signal (step pulse) was pretty close to what I needed, but it wasn’t quite good enough. So I read through all the decoders. And I came across UART.

UART. Wait… Earlier when I was investigating emulating a Vector 4 keyboard I knew from past experience the signal looked pretty similar to serial (RS-232), but it wasn’t quite the same so I thought it’d be a pain to figure out how to configure some hardware appropriately. “Bit banging will be so easy!” (And then it wasn’t; but it was fun.) I hadn’t considered whether it might just be a plain UART. A quick look on Wikipedia confirms, it is totally a plain, ordinary UART.

I plug a USB to UART TTL adapter into my computer and attach the UART to the keyboard port, fire up miniterm.py /dev/ttyUSB0 300 and… it just works. Dang, that means it is also trivial to use the UART on the Raspberry Pi or the UART on Raspberry Pi Pico with MicroPython or a stm32f1 Blue Pill.

In retrospect, it should have been obvious. The Vector 4 User’s Manual even says as much:

Let’s make an adapter

My real goal is to use a modern keyboard with the Vector 4. I can already do that with USB UART and miniterm, but that requires my computer. It’d be easy to use a Raspberry Pi Zero instead, but it is overkill and would need to boot on each power-on. A microcontroller is ideal if it is easy.

There are two options for reading from a “modern” keyboard: PS/2 and USB. PS/2 has separate data and clock and doesn’t look too bad, although it is 5V. USB requires USB host support, so the Blue Pill wouldn’t work but the Pico would work with USB On-The-Go. And I already have an adapter cable.

Some searching and I find TinyUSB has a keyboard example. And the example outputs to a terminal via a UART! All I need to do is disable some debug printfs and swap to 300 baud, and build for the Raspberry Pi Pico.

tar xf tinyusb-0.11.0.tar.gz
cd tinyusb-0.11.0 # assuming the archive extracts to this directory
vim hw/bsp/board.h # change CFG_BOARD_UART_BAUDRATE to 300
cd examples/host/cdc_msc_hid
vim src/hid_app.c src/main.c # disable printfs
mkdir build
cd build
cmake -DFAMILY=rp2040 -DBOARD=raspberry_pi_pico .. # Seems fine without -DBOARD
make -j
# copy cdc_msc_hid.uf2 to Pico

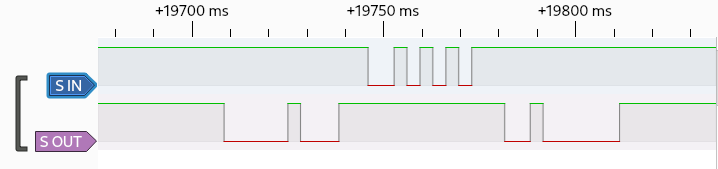

The logic analyzer confirms it is working, so now I need a physical adapter. I grab a 6P6C mount I happen to have, solder wires directly to it, and attach it to the Pico. The Vector provides 5V, which is perfect for using USB OTG and powering the Pico. (Power+ground on the right. TX on the left, with RX floating because it is 5V.)

Testing shows it works with the Vector, so then I enhance it to behave more like the original keyboard. Since the last post, I have now obtained the Vector 4 Technical Information (P/N 7200-0001) which provides all the keycodes. I also get rid of the blinking LED and have the LED signal key presses instead. At some point I’ll need to implement auto-repeat when holding down a key, but the patch is already usable.

Vector 4 Keyboard

I have a Vector 4 manufactured by Vector Graphic, Inc. It’s an old machine from the 80s that happily still runs. Or at least one out of two I have still runs.

I’ve been on a long escapade trying to preserve the device as there’s very little information archived for it online. This has included adding hard sector and Micropolis support to FluxEngine and hard sector support and async I/O handling to FlashFloppy.

In 2020 I was trying to use the machine and the keyboard wouldn’t work. The keyboard has foam pads with one conductive side and the foam had disintegrated. Thankfully other keyboards from the era, much more common than mine, have suffered the same fate and TexElec sells replacements. They are a bit finicky, so some keys won’t work and I have to reopen the keyboard (with over 20 screws) to fiddle with the pads. But all the keys are currently working. Great.

But the keyboard isn’t that great to type on. It works, but pales in comparison to cheap keyboards today. How hard would it be to make an adapter to use a “modern” USB or PS/2 keyboard? The keyboard’s operation isn’t documented anywhere to my knowledge, but I figured it’d be quick to reverse engineer.

The keyboard connects to the computer with a 6P6C connector, and a board in the machine has helpful labels. The 6P6C connector is on the bottom left; ignore the pin numbers, except pin one.

Following the traces, we get:

| Pin | Assignment |
|---|---|
| 1 | GND |
| 2 | GND |
| 3 | GND |
| 4 | +5V |
| 5 | S OUT |
| 6 | S IN |

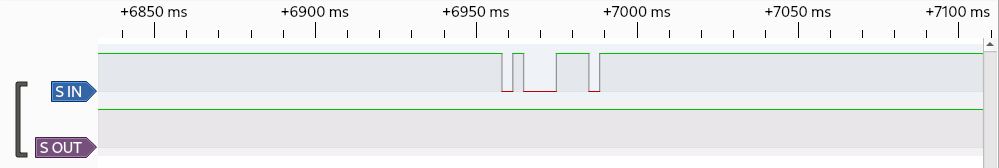

“S” would clearly mean serial, and there’s no separate clock. Maybe it’ll be like RS-232. Now let’s take a look with a logic analyzer.

Typing is at least mostly a one-way protocol. The pin is high during idle. Comparing several key presses made the encoding clear: 0 is low, 1 is high. The bit rate is 300 bit/s. Each frame starts with a 0 (start) bit, has 8 data bits sent LSB first, and ends with a 1 (stop) bit. The data bits are ASCII characters. When holding down a key, presses are 71 ms apart. There’s no obvious source of queuing in the keyboard, so it’d be hard to get two letters closer together than that. My key presses are buffered, so I guess they are queued on the computer and not the keyboard. I’m not aware of an easy way to overflow that buffer, as it seems to hold more than ~30 characters. Wow. That was easy.
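To make the framing concrete, here is a minimal JavaScript sketch of the encoding described above. It only models the sequence of line levels, not real-time output on a pin, and the names frameBits and decodeFrame are made up for illustration.

```javascript
// Illustrative sketch only: models the frame layout seen on the logic
// analyzer (idle high, start bit 0, 8 data bits LSB first, stop bit 1).
const BAUD = 300;                // bits per second, as measured
const BIT_TIME_MS = 1000 / BAUD; // about 3.33 ms per bit

// Return the 10 line levels (0 = low, 1 = high) for one byte.
function frameBits(byte) {
  const bits = [0]; // start bit
  for (let i = 0; i < 8; i++) {
    bits.push((byte >> i) & 1); // data bits, least significant first
  }
  bits.push(1); // stop bit
  return bits;
}

// Inverse of frameBits: recover the byte from 10 sampled levels.
function decodeFrame(bits) {
  if (bits.length !== 10 || bits[0] !== 0 || bits[9] !== 1) {
    throw new Error("framing error");
  }
  let byte = 0;
  for (let i = 0; i < 8; i++) {
    byte |= bits[i + 1] << i;
  }
  return byte;
}

console.log(frameBits("A".charCodeAt(0)).join("")); // 0100000101
console.log((10 * BIT_TIME_MS).toFixed(1) + " ms per character");
```

At 300 bit/s a 10-bit frame takes about 33 ms, so the 71 ms repeat interval leaves the line idle for roughly half the time between repeated characters.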

Now can I make a keyboard emulator? I figured I’d start with a Raspberry Pi Zero W to make entering text easy over wireless. Python should be easy, and 3 ms timings seem like something it could probably handle. A quick script later and… it doesn’t work. Looking at the logic analyzer shows really bad timing. Oh well… let’s port it to Go. Surely a quick Go program will work. Nope. The timing is much better, but still not good enough. Busy looping and nice levels didn’t help. Shucks.

Well, I was wanting a project to use a Raspberry Pi Pico. It has MicroPython; I wonder what that’s like. A quick port to MicroPython and… it works! The computer displays “KBD error”, but the keyboard works regardless.

What’s the point of S OUT? Just after cold boot starts, the computer sends 0x08 (BS?), but there’s no response. Later the computer sends 0x10 (DLE? surely not), the keyboard replies 0xAA, and the computer sends 0x02 (STX?). That exchange seems to be the KBD test and can be triggered separately with a ‘T’ Test mode instead of the normal HDD or FDD bootup. The keyboard beeps during this exchange. After pressing ‘W’ to choose to boot from the “Winchester” HDD, the computer sends 0x02 (STX?), also with no response. It seems the S OUT data has some custom meaning and just uses powers of two. The 0xAA response has alternating bits, so it is good for testing.

The Raspberry Pi Pico isn’t 5V tolerant, so I won’t bother any more with S OUT. USB or PS/2 can be for some other day, but it is quite feasible.

In a follow-up post I make the adapter.

Hosting my own SMTP server

I discussed previously how I found myself searching for a way to provide email notifications from my servers. I had previously been using my Gmail account, and I realized I didn't want the servers to have access to my Gmail account any longer.

Since I already had a domain name, I wanted to try out hosting an SMTP server. While the idea of self-hosting the SMTP server seemed crazy to me, for this limited use-case it actually turned out well and was relatively easy! Being counted as spam hasn't been too large a problem and the configuration wasn't too bad.

The Apprehension

I had previously hosted my own email server in 2004. And I hated it. Outgoing emails could easily be marked as spam and incoming emails were virtually all spam. It wasn't serving much purpose, so I had turned it off.

Mail transfer agents (MTAs), which is what I'm needing here, are also notoriously obscure and complicated to configure properly. There are also many to choose from and there's generally no "cookie cutter" configuration, so the task requires learning a reasonable amount about each option in order to determine a preference. I'm maybe willing to learn one, but I'd rather not sink a bunch of time into an option just to discover I hate it. Or that I hate all the options.

I had also expected things had gotten worse since I last delved into it, since there's been new standards to address spam and I have little idea how they work, although I know some use cryptography. Given how painful TLS is to configure (and how painful it was to get a certificate before Let's Encrypt), that sounds awful.

Virtually everyone on the Internet agrees that the best way to avoid ending up in the spam folder is to use a well-established email provider. I need this for possibly-important notification emails; I will have a very low tolerance for them going to spam. I worried that even after all the effort of setting things up, too many emails would go to spam and I would have to scrap it.

SPF (IP Whitelist)

As part of my research, I had taken a quick glance at SPF. It was easy enough that I set it up immediately. SPF is simply a whitelist of IP addresses that send email for your domain, published via DNS.

The detailed record syntax is a good reference after you see an example. You publish it as a TXT record for your domain. So I had a TXT for @ for my ersoft.org domain. A simple value to say "mail should come from IP 1.2.3.4" would be v=spf1 ip4:1.2.3.4 ~all.

Since explicitly listing IP addresses is annoying to update, you can refer to a hostname with something like a:example.ersoft.org. You can also refer to servers in your MX records with mx. And you simply list multiple things before the ~all to allow them, like v=spf1 ip4:1.2.3.4 ip4:1.2.3.5 a:example.ersoft.org mx ~all.
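To show how such a value breaks down, here is a small JavaScript sketch that splits an SPF record into its terms. It is only an illustration of the syntax: parseSpf is a made-up name, it does no DNS lookups or policy evaluation, and modifiers such as redirect= are not handled.

```javascript
// Illustrative sketch: split an SPF TXT value into mechanisms and qualifiers.
function parseSpf(record) {
  const [version, ...terms] = record.trim().split(/\s+/);
  if (version !== "v=spf1") {
    throw new Error("not an SPF record");
  }
  const results = { "+": "pass", "-": "fail", "~": "softfail", "?": "neutral" };
  return terms.map((term) => {
    // A leading +, -, ~ or ? is the qualifier; "+" is implied when absent.
    const hasQualifier = "+-~?".includes(term[0]);
    const qualifier = hasQualifier ? term[0] : "+";
    const body = hasQualifier ? term.slice(1) : term;
    const colon = body.indexOf(":");
    return {
      result: results[qualifier],
      mechanism: colon === -1 ? body : body.slice(0, colon),
      value: colon === -1 ? null : body.slice(colon + 1),
    };
  });
}

console.log(parseSpf("v=spf1 ip4:1.2.3.4 a:example.ersoft.org mx ~all"));
```

The last entry comes out as mechanism all with result softfail, which is the ~all catch-all; with -all it would be fail instead.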

If you want to see published values for various domains (or your own), you can use dig via dig ersoft.org txt.

You should probably avoid the stricter -all. Legitimate email forwarding by others breaks SPF. For example, I can use Google Domains' email forwarding feature to forward ejona@ersoft.org to my Gmail account. If you send an email to ejona@ersoft.org, that email would be forwarded to my Gmail account by a Google Domains server with you as the sender. But your SPF policy doesn't allow Google Domains to send emails for your domain, so your SPF will fail because of my configuration. And if you have DMARC enabled, you'd receive failure reports. This is a fatal flaw of SPF. And so we limit the negative impact of SPF with ~all.

OpenSMTPD

As I glanced at various SMTP servers, I came across a two-line configuration snippet on the Arch Linux wiki for an OpenSMTPD server acting as a mail relay. It seemed pretty comprehensible, so OpenSMTPD seemed worth a try. And it turned out great.

The smtpd.conf documentation is really nice. Between it and the default smtpd.conf, I quickly had my initial configuration, which was simply:

listen on localhost

table white-sender {"@ersoft.org"}

table white-recipient {"example@gmail.com", "@ersoft.org"}

accept sender <white-sender> for any recipient <white-recipient> tls verify

I was very concerned about being marked as a spammer, so I wanted the white lists before any testing. It would have been very easy to have test emails that were "bad" (like wrong "from" address) and I didn't want those to go to Google. So the configuration only listens on localhost and only allows outgoing mail from ersoft.org addresses to the whitelisted Gmail account or to my domain.

I needed a test command. I used:

echo "Subject: sendmail test; unique-test-desc" | \

sendmail -v -f noreply@ersoft.org example@gmail.com

And it failed! But with a helpful link to Google's Guidelines for IPv6. I was actually really happy to see this, because the documentation was really helpful for more than IPv6.

So the problem was that IPv6 requires PTR records. While I may mess with that eventually, I don't want to do it out of the gate. So I limit myself to using IPv4 by adding a configuration line: limit mta inet4. And then my test email arrived! (Note: I already had SPF configured here; I don't know how important it was.)

At this point there's an email in my inbox that I can inspect. In the email drop-down menu I clicked "Show original" to see the raw MIME email. This was helpful to see that the sender and receiver were correct. But looking at "Authentication-Results" was also helpful. In this email, I saw a "spf=pass" with some additional information that is useful for debugging. I was also able to confirm TLS was used by seeing "version=TLS1_2 cipher=ECDHE-RSA-AES128-GCM-SHA256" in the "Received" header. When you get there, you can also use it for DKIM and DMARC debugging. If you are new to email, understand that each server the email passed through will prepend additional headers. So, for example, there are generally multiple "Received" headers, and the topmost one was added by the last server.
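If you would rather check those results programmatically than read raw headers, a rough JavaScript sketch follows. The header value in it is invented for illustration (it is not copied from a real message), authResults is a made-up name, and the parsing is deliberately naive: it assumes no semicolons appear inside parenthesized comments.

```javascript
// Illustrative sketch: pull "method=result" pairs (spf, dkim, dmarc) out of
// an Authentication-Results header value.
function authResults(headerValue) {
  const results = {};
  // The first ";"-separated part names the checking server; the remaining
  // clauses each start with "method=result".
  for (const clause of headerValue.split(";").slice(1)) {
    const match = clause.trim().match(/^(spf|dkim|dmarc)=(\w+)/i);
    if (match) {
      results[match[1].toLowerCase()] = match[2].toLowerCase();
    }
  }
  return results;
}

// Invented sample value, shaped like what a receiving server might add.
const sample =
  "mx.example.net; spf=pass (sender IP is 1.2.3.4) " +
  "smtp.mailfrom=noreply@ersoft.org; dkim=none";
console.log(authResults(sample)); // { spf: 'pass', dkim: 'none' }
```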

That was really easy! Now it was iteration and improvement.

DKIM (Cryptographic Message Signing)

DKIM is a signature-based mechanism to prove that an email originated from a particular source. Interestingly, DKIM can be used to sign emails even if the email being signed is from a different domain. This allows Gmail, for instance, to send an email impersonating an @ersoft.org address, but the DKIM would show it coming from a gmail.com server. So the receiver would then have a clear view: ersoft.org did not send the email, but if gmail.com is reasonably trusted, it can be accepted anyway.

DKIM publishes public keys via DNS as a subdomain of the _domainkey subdomain. The creation and publishing of keys is fairly easy, especially compared to things like TLS. Most of the effort comes from configuring your server to provide the signatures using the key, and that's not onerous.

OpenSMTPD provides an example using dkimproxy and dkimproxy provides reasonable documentation. Note that Google suggests using 2048 bit keys, so you may want to use "2048" instead of the "1024" in the "openssl genrsa" command when following the dkimproxy documentation.

I used a dkimproxy_out.conf like:

listen 127.0.0.1:10027

relay 127.0.0.1:10028

domain ersoft.org

keyfile /etc/dkimproxy/private.key

selector example

"Selector" would match the DNS name used, so "example._domainkey.ersoft.org" (which doesn't exist) in this case. You can use dig via dig example._domainkey.ersoft.org txt.
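The mapping from selector to DNS name is mechanical, as this small JavaScript sketch shows. It also reads the s= (selector) and d= (domain) tags from a DKIM-Signature header value, which is how a receiving server knows which name to look up. The function names are made up, and the signature string is an invented, truncated example.

```javascript
// Illustrative sketch: build the DNS name that holds a DKIM public key.
function dkimRecordName(selector, domain) {
  return `${selector}._domainkey.${domain}`;
}

// Read the s= and d= tags from a DKIM-Signature header value and return the
// name a verifier would query for the public key.
function dkimNameFromSignature(signature) {
  const selector = signature.match(/(?:^|;)\s*s=([^;\s]+)/);
  const domain = signature.match(/(?:^|;)\s*d=([^;\s]+)/);
  if (!selector || !domain) {
    throw new Error("missing s= or d= tag");
  }
  return dkimRecordName(selector[1], domain[1]);
}

// Invented, truncated signature value for illustration.
const signature = "v=1; a=rsa-sha256; d=ersoft.org; s=example; h=from:subject";
console.log(dkimNameFromSignature(signature)); // example._domainkey.ersoft.org
```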

Unlike the example documentation, I'm using filtering, so I found it useful to use tagged ! DKIM since otherwise it seemed possible to form an infinite loop:

listen on localhost

listen on localhost port 10028 tag DKIM

...

accept tagged DKIM sender <white-sender> for any recipient <white-recipient> tls verify

accept tagged ! DKIM from local for any relay via smtp://127.0.0.1:10027

Note that I'm filtering after adding the DKIM signature. Since it is possible for someone on the same machine to write to dkimproxy directly, I am considering the results from the proxy "untrusted."

DMARC (Enforcement and Reporting)

DMARC specifies what to do with emails that have SPF or DKIM issues and how to report them, published via DNS. For instance, if you misconfigured your SPF and Google was flagging emails, you'd like to be notified instead of just happening to notice emails were being rejected. While the DMARC standard allows for multiple types of notifications, the main option is to specify an email address that should receive aggregated daily digests independent of whether there are problems. Since those would be annoying to receive in a personal email account and you really need to process the raw data, there are services that you can route these emails to and that provide pretty graphs.

DMARC also uses a TXT record in DNS, but with the _dmarc subdomain (so _dmarc.ersoft.org for me). If you are comfortable with your setup, a strict value would be v=DMARC1; p=reject; rua=mailto:example@ersoft.org with a proper email address. The standard disallows reporting emails from going to a different domain, but that is different from an earlier draft and I suspect servers may allow it anyway. But I've also not needed to investigate this for my setup. I found G Suite's documentation to be most clear in describing the useful options.

If you want to see published values for various domains (or your own), you can use dig via dig _dmarc.ersoft.org txt.
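A DMARC value is a semicolon-separated list of tag=value pairs, which the following JavaScript sketch pulls apart. It is a simplified illustration that only reads the v, p and rua tags; parseDmarc is a made-up name, and the record is the example value from above.

```javascript
// Illustrative sketch: read the policy and report addresses from a DMARC
// TXT value such as the one dig returns for _dmarc.<domain>.
function parseDmarc(record) {
  const tags = {};
  for (const part of record.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue; // skip empty segments, e.g. after a trailing ";"
    tags[part.slice(0, eq).trim()] = part.slice(eq + 1).trim();
  }
  if (tags.v !== "DMARC1") {
    throw new Error("not a DMARC record");
  }
  return {
    policy: tags.p, // none, quarantine or reject
    // rua may list several comma-separated report URIs.
    aggregateReports: tags.rua ? tags.rua.split(",").map((u) => u.trim()) : [],
  };
}

console.log(parseDmarc("v=DMARC1; p=reject; rua=mailto:example@ersoft.org"));
```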

Final notes

To support extra machines, you can either set up each with its own server (and its own DKIM selector), or you could allow them to use the first machine's SMTP server. I went with the latter option. I considered using client certificates, but simple username/password seemed about as effective and easier to set up and manage.

If using Let's Encrypt for TLS certs, note that OpenSMTPD is particular about the permissions of the private key file. You can fix the permissions via chmod o-r /etc/letsencrypt/live/example.ersoft.org/privkey.pem. You can configure /etc/letsencrypt/renewal/example.ersoft.org.conf to include a renew_hook within [renewalparams] to automate the "fix".

Also, if you're interested in using file-based tables in OpenSMTPD, I'll note that smtpctl update table <tablename> didn't actually work for me. I just restart OpenSMTPD instead.